- JMP User Community

Computing std devs for Control Charts?

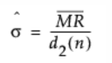

Hi. I have a question for any Six Sigma folks out there. In the Quality and Process Methods section of the User Manual, under "Statistical Details for Control Chart Builder", the standard deviation seems to be estimated from the range. For example, for XmR charts, the standard deviation formula is:

$$\hat{\sigma} = \frac{\overline{MR}}{d_2}$$

where MR-bar is the average of the moving range and d2 is a value from a lookup table.
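A minimal Python sketch of that XmR estimate, assuming moving ranges of span 2 (consecutive pairs), for which the tabulated d2 is 1.128. The data values and the helper names `moving_ranges` and `sigma_from_mr` are hypothetical, and this is not JMP's implementation:

```python
# Hypothetical helpers; d2 = 1.128 is the tabulated constant for
# moving ranges of span 2 (each MR uses two consecutive observations).
D2_SPAN2 = 1.128

def moving_ranges(xs):
    """Absolute differences between consecutive observations."""
    return [abs(b - a) for a, b in zip(xs, xs[1:])]

def sigma_from_mr(xs, d2=D2_SPAN2):
    """Estimate sigma as MR-bar / d2, as on an individuals (XmR) chart."""
    mrs = moving_ranges(xs)
    mr_bar = sum(mrs) / len(mrs)
    return mr_bar / d2

data = [10.2, 9.8, 10.1, 10.4, 9.9, 10.0, 10.3, 9.7]
print(round(sigma_from_mr(data), 3))  # 0.317
```

Here the seven moving ranges sum to 2.5, so MR-bar is 2.5 / 7 and the estimate is about 0.317.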

I'm not unfamiliar with this methodology for computing sigma and have seen it used in several other process control references. My question, though, is why? That is, why do we estimate it this way when we could instead simply use the traditional formula for computing sigma:

$$s = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_i - \bar{x}\right)^2}$$
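For comparison, the traditional formula written out directly, as a sketch (`sample_sd` and the data are hypothetical). Python's standard-library `statistics.stdev` also divides by N - 1, so the two should agree:

```python
import math
import statistics

def sample_sd(xs):
    """Sample standard deviation with Bessel's correction (divide by N - 1)."""
    n = len(xs)
    mean = sum(xs) / n
    ss = sum((x - mean) ** 2 for x in xs)  # sum of squared deviations from the mean
    return math.sqrt(ss / (n - 1))

data = [10.2, 9.8, 10.1, 10.4, 9.9, 10.0, 10.3, 9.7]
print(round(sample_sd(data), 3))                              # 0.245
print(math.isclose(sample_sd(data), statistics.stdev(data)))  # True
```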

I understand that technically, any computation of sigma is an estimate of the "true" value, so perhaps one might argue that one formula is as good as another. However, the latter formula comes from the actual definition of standard deviation. I also understand there's the question of biased vs. unbiased estimators and Bessel's correction (N-1), but I feel the same issue would apply to both formulas, yes? Lastly, I understand that the latter formula requires more computation steps. Computation speed might have been an issue many decades ago, but that doesn't seem like an especially valid reason anymore.
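On the bias question, a small simulation sketch may help, assuming independent, normally distributed data with true sigma = 1. The N - 1 divisor makes the variance unbiased, but s itself still averages slightly below sigma (about 0.940 sigma for N = 5, the tabulated c4 constant), whereas d2 is chosen so that MR-bar / d2 averages to sigma under those same assumptions. The function names, sample size, and seed are arbitrary choices for illustration:

```python
import random
import statistics

D2_SPAN2 = 1.128  # d2 for moving ranges of span 2

def mr_sigma(xs):
    """Average moving range divided by d2."""
    mrs = [abs(b - a) for a, b in zip(xs, xs[1:])]
    return statistics.fmean(mrs) / D2_SPAN2

def average_estimates(n=5, reps=20000, seed=1):
    """Average of s and of MR-bar/d2 over many simulated samples of size n
    drawn from a normal distribution with true sigma = 1."""
    rng = random.Random(seed)
    s_vals, mr_vals = [], []
    for _ in range(reps):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        s_vals.append(statistics.stdev(xs))  # uses the N - 1 divisor
        mr_vals.append(mr_sigma(xs))
    return statistics.fmean(s_vals), statistics.fmean(mr_vals)

mean_s, mean_mr = average_estimates()
print(f"average s: {mean_s:.3f}, average MR-bar/d2: {mean_mr:.3f}")
```

Expect the first value near 0.94 and the second near 1.00; the exact digits depend on the seed.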

Perhaps I'm wrong about one or more of my assumptions above, but if so, I'd love to know why. I've searched around the internet and other references, and I've never been able to come up with a satisfactory answer. Does anyone know?

Thanks, and Happy New Year!

Re: Computing std devs for Control Charts?

I seem to have touched a nerve. If so, sorry. Nonetheless, I'm now in agreement. Please see my reply above.

Re: Computing std devs for Control Charts?

There is also another very practical reason why sigma was estimated using the average moving range or the average range. It was very easy to set up an understandable, organized, paper-based data collection sheet for recording results and calculating the ranges, moving ranges, average moving range, and from those the estimate of sigma, all with basic arithmetic done by hand. In a time before handheld calculators or computers, this was often the easiest way to do this type of analysis. The MR-based estimate is slightly less "good", statistically speaking, than the regular method, but in practice it is adequate in most cases.
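To see what "slightly less good" means, here is a simulation sketch assuming a stable process (independent normal data, true sigma = 1, N = 25 points per chart). Both estimators land near 1 on average, but MR-bar / d2 scatters more from sample to sample than s does. The helper names and seed are hypothetical:

```python
import random
import statistics

D2_SPAN2 = 1.128  # d2 for moving ranges of span 2

def mr_sigma(xs):
    """Average moving range divided by d2."""
    mrs = [abs(b - a) for a, b in zip(xs, xs[1:])]
    return statistics.fmean(mrs) / D2_SPAN2

def estimator_spread(n=25, reps=4000, seed=7):
    """Simulate stable normal data (true sigma = 1) and return the standard
    deviation, across repetitions, of s and of MR-bar/d2."""
    rng = random.Random(seed)
    s_vals, mr_vals = [], []
    for _ in range(reps):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        s_vals.append(statistics.stdev(xs))
        mr_vals.append(mr_sigma(xs))
    return statistics.stdev(s_vals), statistics.stdev(mr_vals)

sd_s, sd_mr = estimator_spread()
print(f"spread of s: {sd_s:.3f}, spread of MR-bar/d2: {sd_mr:.3f}")
```

A larger spread means a single chart's sigma estimate is less precise, which is the statistical cost being traded for the simpler hand calculation.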

Some benefits and cautions about using the MR or range based approaches to determine sigma:

- The MR-based sigma estimate captures "short-term" variation between successive subgroups (successive individual observations, on an XmR chart), while a within-subgroup range-based estimate mostly reflects within-batch variation. If you want your SPC method to be sensitive to shifts in the process mean, an MR-based or range-based sigma estimate may help provide that sensitivity.

- However, if you do not need that level of sensitivity, and your process data are subject to many shifts in the process mean, then computing S from the sum of squared deviations from the mean (the regular definition most people learn) may be more appropriate. In JMP, the control chart that uses the regular definition of S for the control limits is called the "Levey Jennings" chart.
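A sketch of the sensitivity trade-off described above, using hypothetical data: a repeating noise pattern with a step shift of +2 halfway through. Limits are drawn at the overall mean plus or minus 3 sigma, once with the MR-based estimate and once with the overall s (the regular definition used for the Levey Jennings chart, per the description above). This is a simplified illustration, not JMP's exact computation:

```python
import statistics

D2_SPAN2 = 1.128  # d2 for moving ranges of span 2

def mr_sigma(xs):
    """Short-term sigma estimate: average moving range divided by d2."""
    mrs = [abs(b - a) for a, b in zip(xs, xs[1:])]
    return statistics.fmean(mrs) / D2_SPAN2

def limits(center, sigma, k=3.0):
    """Return (lower, upper) limits at center +/- k * sigma."""
    return center - k * sigma, center + k * sigma

def count_outside(xs, lo, hi):
    """Number of points below lo or above hi."""
    return sum(1 for x in xs if x < lo or x > hi)

# Hypothetical data: the same noise pattern around 10, then around 12
noise = [0.3, -0.2, 0.1, -0.4, 0.2]
data = [10 + e for e in noise * 4] + [12 + e for e in noise * 4]

center = statistics.fmean(data)
sigma_mr = mr_sigma(data)               # only one moving range spans the shift
sigma_overall = statistics.stdev(data)  # inflated by the shift in the mean

mr_lo, mr_hi = limits(center, sigma_mr)
lj_lo, lj_hi = limits(center, sigma_overall)

print(f"MR-based sigma {sigma_mr:.3f}, overall s {sigma_overall:.3f}")  # 0.407, 1.047
print("outside MR-based limits:", count_outside(data, mr_lo, mr_hi))     # 8
print("outside overall-s limits:", count_outside(data, lj_lo, lj_hi))    # 0
```

With the MR-based limits the shift shows up as points beyond the limits; with the overall s, the shift itself inflates sigma enough that no point falls outside.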

Ultimately, the choice of control chart method depends on how the data are collected, whether you have rational subgroups and what they are, and how sensitive you want your SPC procedure to be.

Re: Computing std devs for Control Charts?

I've stayed on the sidelines of this thread but am in general agreement with both @SamGardner's and @statman's points. And I see, @nikles, that you've come around to a reasoned conclusion. So I'll just add the way I have always thought about the MR-bar method vs. the classic standard deviation calculation. The purpose of sigma in this case is to provide the best estimate of an unknown quantity, and that quantity represents our best process-based knowledge of common-cause variation. Since rational subgroups are impossible in some situations, the next-closest thing to a rational subgroup is successive observations, as long as we are willing to assume that common-cause variation is stable from one observation to the next across the sample space. That's the reason @statman insists on plotting the data: to evaluate this stability property. Now here's my way of thinking about it: with stability, and using a moving-range construct, I liken this conceptually to bootstrap sampling from a stable, unknown population. And bootstrap estimates are almost always a great way to go if you can use them. My logic may be faulty, but it's how I think about the many issues at play here.
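One way to put numbers alongside the plot when checking stability is an XmR sketch that flags individual values outside the mean plus or minus 3 MR-bar / d2, and moving ranges above D4 times MR-bar. It assumes span-2 moving ranges (d2 = 1.128, D4 = 3.267 from standard SPC constant tables) and hypothetical data with a single spike; `xmr_signals` is an illustrative helper, not how JMP computes its charts:

```python
import statistics

D2_SPAN2 = 1.128  # d2 for moving ranges of span 2
D4_SPAN2 = 3.267  # D4 for span 2 (D3 = 0, so the MR chart's lower limit is 0)

def xmr_signals(xs):
    """Return (indices of points outside the X-chart limits,
    indices i where |xs[i+1] - xs[i]| exceeds the MR-chart upper limit)."""
    mrs = [abs(b - a) for a, b in zip(xs, xs[1:])]
    mr_bar = statistics.fmean(mrs)
    center = statistics.fmean(xs)
    sigma_hat = mr_bar / D2_SPAN2
    x_lo, x_hi = center - 3 * sigma_hat, center + 3 * sigma_hat
    mr_ucl = D4_SPAN2 * mr_bar
    x_out = [i for i, x in enumerate(xs) if x < x_lo or x > x_hi]
    mr_out = [i for i, mr in enumerate(mrs) if mr > mr_ucl]
    return x_out, mr_out

data = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9, 12.0, 10.0, 10.1, 9.9]
print(xmr_signals(data))  # ([8], [7, 8])
```

The spike appears once on the X chart but twice on the MR chart, because it enters two consecutive moving ranges.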

Re: Computing std devs for Control Charts?

Interesting conversation. May I recommend that you check out our new SPC e-course, which explains this exact topic? You can also refer other users who have a similar question to this course. This subject is covered in lesson 2 of the course.

JMP Statistical Process Control Course

- © 2024 JMP Statistical Discovery LLC. All Rights Reserved.
