Predictive Modeling Hands-on Activities Solutions


These hands-on activities allow you to practice predictive modeling using JMP. This post contains the predictive modeling hands-on activities, the data, and a PDF file of these solutions in English.

Continuous response, continuous predictors

- Use the data in PM 1.jmp to build predictive models. These data were collected over time.

a. Visualize the data using Graph Builder and Multivariate. Are there any data problems?

1) Open PM 1.jmp.

Build a time plot

2) Select Graph > Graph Builder.

3) Drag Y to the Y zone.

4) Right-click Y and select Row > Row.

5) Drag Row to the X zone.

6) Click Done.

The data appear to be correlated over the rows. In this case, the rows correspond to time order. The mean and variability of the response appear to be constant over time. Add a column switcher to reproduce this graph for the predictor variables.

7) Click the red triangle next to Graph Builder and select Redo > Column Switcher.

9) Select all columns from the Select Replacement Columns box.

10) Click OK.

11) Click on each variable in the Column Switcher in turn.

Many variables appear autocorrelated. No other data problems are evident. Save the script to the data table.

12) Click the red triangle next to Graph Builder and select Save Script > To Data Table.

13) Enter Time Plot for the name.

14) Click OK.
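The autocorrelation that the time plots suggest can also be expressed as a number. The following is a minimal sketch in Python (not JSL, and not part of the original solution) of the lag-1 sample autocorrelation; the function name and the two example series are hypothetical and are not taken from PM 1.jmp.

```python
def lag_autocorrelation(values, lag=1):
    """Sample autocorrelation of a sequence at the given lag."""
    n = len(values)
    if not 0 < lag < n:
        raise ValueError("lag must be between 1 and len(values) - 1")
    mean = sum(values) / n
    denom = sum((v - mean) ** 2 for v in values)
    if denom == 0:
        raise ValueError("values are constant, autocorrelation is undefined")
    # Sum of products of deviations that are `lag` rows apart.
    numer = sum(
        (values[i] - mean) * (values[i + lag] - mean) for i in range(n - lag)
    )
    return numer / denom


if __name__ == "__main__":
    # Hypothetical series: neighboring rows are similar (positive autocorrelation).
    smooth = [1.0, 2.0, 3.0, 4.0, 5.0, 4.0, 3.0, 2.0, 1.0, 2.0]
    # Hypothetical series: neighboring rows alternate (negative autocorrelation).
    jagged = [1.0, 5.0, 1.0, 5.0, 1.0, 5.0, 1.0, 5.0, 1.0, 5.0]
    print(lag_autocorrelation(smooth))
    print(lag_autocorrelation(jagged))
```

A value near zero would suggest no relationship between neighboring rows; values well above zero match the slowly wandering pattern described for the time plots.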

Build a scatter plot

1) Select Graph > Graph Builder.

2) Drag Y to the Y zone.

3) Drag X1 to the X zone.

4) Click Done.

The relationship between Y and X1 appears strong, positive, and linear. Add a column switcher to reproduce this graph for other predictors.

5) Click the red triangle next to Graph Builder and select Redo > Column Switcher.

6) Select all columns in the Select Replacement Columns box.

7) Click OK.

Other relationships are also strong. Save the script to the data table.

9) Click the red triangle next to Graph Builder and select Save Script > To Data Table.

10) Enter Y vs. Predictors for the name.

11) Click OK.

Another way to view the relationships among variables is with the Multivariate platform.

Multivariate

1) Select Analyze > Multivariate Methods > Multivariate.

2) Select all columns, then click Y, Columns.

3) Click OK.

There are many large positive (blue) and negative (red) correlations among the variables.

The same scatter plots that you created in Graph Builder can be seen all at once in the Scatterplot Matrix.

4) Click the red triangle next to Multivariate and select Color Maps > Color Map on Correlations.

This color map duplicates the information in the correlation matrix but might be easier to read.

No data problems, other than the autocorrelation, are detected. Save the script to the data table.

5) Click the red triangle next to Multivariate and select Save Script > To Data Table.

6) Click OK.

7) Save the data table.
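For readers who want to see what the correlation matrix contains, here is a small Python sketch of pairwise Pearson correlations. It is only an illustration under assumed inputs: the column names and values are made up, and the functions are hypothetical helpers rather than anything JMP exposes.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(columns):
    """Nested dict of correlations for a dict of column name -> values."""
    names = list(columns)
    return {
        a: {b: pearson(columns[a], columns[b]) for b in names} for a in names
    }


if __name__ == "__main__":
    # Hypothetical columns, not the PM 1.jmp data.
    data = {
        "Y": [2.0, 4.1, 5.9, 8.2, 9.8],
        "X1": [1.0, 2.0, 3.0, 4.0, 5.0],
        "X2": [9.0, 7.5, 6.1, 3.9, 2.0],
    }
    for name, row in correlation_matrix(data).items():
        print(name, {k: round(v, 2) for k, v in row.items()})
```

Each off-diagonal entry corresponds to one cell of the color map: values near 1 or -1 are the strongly colored cells.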

b. Build a response surface model using Fit Model and the Response Surface macro. What is the R² of the full model? Are there any problems with the model fit seen in the Residual by Predicted plot? Which predictor variables are most important for predicting Y? Save the prediction formula to the data table.

1) Select Analyze > Fit Model.

2) Select Y, then click Y.

3) Select X1 through X10, then click Macros > Response Surface.

4) Click Run.

5) Close the Effect Summary report.

R² is about 89%, meaning that this model explains about 89% of the variability in Y. The standard deviation of the unexplained variability (the root mean square error) is about 1.6. Check the model assumptions.

6) Click the red triangle next to Response Y and select Row Diagnostics > Plot Residual by Predicted.

No problems are seen in the residual plot. Plot the residuals in time order (row order).

7) Click the red triangle next to Response Y and select Row Diagnostics > Plot Residual by Row.

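Autocorrelation is also seen in the residuals. Examine the Actual by Predicted plot to see how much the autocorrelation affects the predictions.

8) Click the red triangle next to Response Y and select Row Diagnostics > Plot Actual by Predicted.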

No bias is seen in the predictions.

Because there are so many observations, the p-values indicate that all variables are important, so p-values alone do not help rank the variables. See the Parameter Estimates report. To find the most important variables, examine the Effect Summary report.

9) Open the Effect Summary outline.

The most important variables for predicting Y are X3, X1, X4, X2, and X5. All main effects and many two-factor interactions are important.

Save the prediction formula.

10) Click the red triangle next to Response Y and select Save Columns > Prediction Formula.

11) Return to the data table and rename the last column as Pred Y RS.

12) Save the data table.
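The Response Surface macro adds main effects, all two-factor interactions, and quadratic terms to the model. The Python sketch below simply enumerates those terms for ten factors to show how large the full model is; the helper name is hypothetical and the code does not fit anything.

```python
from itertools import combinations


def response_surface_terms(factors):
    """List the effects of a full second-order (response surface) model."""
    main_effects = [(f,) for f in factors]
    interactions = list(combinations(factors, 2))  # X1*X2, X1*X3, ...
    quadratics = [(f, f) for f in factors]         # X1*X1, X2*X2, ...
    return main_effects + interactions + quadratics


if __name__ == "__main__":
    factors = [f"X{i}" for i in range(1, 11)]  # X1 through X10
    terms = response_surface_terms(factors)
    print(len(terms))  # 10 main effects + 45 interactions + 10 quadratics = 65
    print(["*".join(t) for t in terms[:12]])
```

With 65 effects plus an intercept, it is not surprising that a ranking such as the Effect Summary report is easier to read than a long table of individual p-values.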

c. Build a neural network model using the default settings of the Neural platform. What is the R² of the model on the validation set? Are there any problems with the model fit seen in the Residual by Predicted plot? Fit another model with 50 nodes. Does this model have appreciably better predictive capability? Save the prediction formula to the data table.

1) Select Analyze > Predictive Modeling > Neural.

2) Select Y, then click Y, Response.

3) Select X1 through X10, then click X, Factor.

4) If you want your results to match this solution (Windows), enter 98765 as the random seed.

5) Click OK.

6) Click Go.

R² on the validation set is about 88%. Examine the model diagnostics.

7) Click the red triangle next to Model NTanH(3) and select Plot Residual by Predicted.

No model problems are evident. Fit a model with more nodes to see if it improves the fit.

9) Open the Model Launch outline.

10) Enter 50 in the Hidden Nodes box.

11) Click Go.

R² on the validation set has increased by less than one percentage point. RASE and Mean Abs Dev are lower only in the second decimal place. The model is not appreciably better.

Note: JMP Pro can add nodes with other activation functions. If you have nonstationary data collected over time, that is, if the mean drifts over time, it is recommended to add one linear node as well. For this data set, because the autocorrelation is stationary, adding one or more nodes with linear activation functions does not appreciably improve the predictive power of the model.

Save the prediction formula for the simpler model, then save the analysis.

12) Click the red triangle next to Model NTanH(3) and select Save Profile Formulas.

13) Return to the data table and rename the last column as Pred Y NN.

14) Return to the Neural report, then click the red triangle next to Neural and select Save Script > To Data Table.

15) Click OK.

16) Save the data table.
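To make the comparison between 3 and 50 hidden nodes more concrete, the sketch below shows the forward pass of a network with one hidden layer of tanh nodes and a linear output, and counts its parameters. This is an assumed, generic architecture written in Python for illustration; the weights are hypothetical, and the code is not the formula that JMP saves.

```python
import math


def predict(x, hidden_weights, hidden_biases, output_weights, output_bias):
    """Forward pass: tanh hidden layer followed by a linear output node."""
    hidden = [
        math.tanh(bias + sum(w * xi for w, xi in zip(weights, x)))
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    return output_bias + sum(w * h for w, h in zip(output_weights, hidden))


def parameter_count(n_inputs, n_hidden):
    """Weights and biases in a one-hidden-layer network with one output."""
    return n_hidden * (n_inputs + 1) + (n_hidden + 1)


if __name__ == "__main__":
    # Hypothetical weights for a network with 2 inputs and 3 hidden nodes.
    hidden_w = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.8]]
    hidden_b = [0.0, 0.1, -0.1]
    print(predict([1.0, 2.0], hidden_w, hidden_b, [1.0, -2.0, 0.5], 3.0))
    print(parameter_count(10, 3))   # 37 parameters for 10 predictors, 3 nodes
    print(parameter_count(10, 50))  # 601 parameters for 10 predictors, 50 nodes
```

Under these assumptions the 50-node network has roughly sixteen times as many parameters as the 3-node network, which is a reason to prefer the simpler model when the validation statistics are nearly the same.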

d. Build a decision tree model using the Partition platform with a 25% validation set. What is the R² of the model on the validation set? How many splits are in the model? Are there any problems with the model fit seen in the Actual by Predicted plot? Which variables are most important for predicting Y? Save the prediction formula to the data table.

1) Select Analyze > Predictive Modeling > Partition.

2) Select Y, then click Y, Response.

3) Select X1 through X10, then click X, Factor.

4) For Validation Portion, enter 0.25.

5) Click OK.

Your results will be slightly different because the validation rows are selected at random.

6) Click Go.

The data are split until the next 10 splits do not improve R² on the validation set, then the model is pruned back. R² on the validation set is about 79%, which is lower than the R² of the response surface model or the neural network model. There were 111 splits.

Assess the model.

7) Click the red triangle next to Partition for Y, then select Plot Actual by Predicted.

There are no problems evident in the model fit.

8) Click the red triangle next to Partition for Y, then select Column Contributions.

The report is ordered by the portion of variability in Y explained by the predictors. X3, X1, and X4 are the top three predictors.

Save the prediction formula.

9) Click the red triangle next to Partition for Y, then select Save Columns > Save Prediction Formula.

10) Click the red triangle next to Partition for Y, then select Save Script > To Data Table.

11) Click OK.

12) Return to the data table and rename the last column to Pred Y DT.

13) Save the data table.
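The idea behind a single split on a continuous response can be sketched in a few lines of Python. The version below picks the cut point that minimizes the summed squared error of the two resulting leaves. This is a generic regression-tree criterion chosen for illustration; it is an assumption and not necessarily the criterion that the Partition platform uses, and the data are hypothetical.

```python
def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(x, y):
    """Return (cut_point, total_sse) for the best single split on x."""
    pairs = sorted(zip(x, y))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot cut between identical x values
        cut = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [p[1] for p in pairs[:i]]
        right = [p[1] for p in pairs[i:]]
        total = sse(left) + sse(right)
        if best is None or total < best[1]:
            best = (cut, total)
    return best


if __name__ == "__main__":
    # Hypothetical data with an obvious step between x = 3 and x = 4.
    x = [1, 2, 3, 4, 5, 6]
    y = [1.0, 1.2, 0.8, 5.1, 4.9, 5.0]
    print(best_split(x, y))
```

Every row that falls in the same leaf receives the same predicted value (the leaf mean), so a tree produces a stepwise prediction even when the response is continuous.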

e. Build an Actual by Predicted graph for the three models using Graph Builder. Which model do you prefer?

1) Select Graph > Graph Builder.

2) Drag Y to the Y zone.

3) Drag Pred Y RS, Pred Y NN, and Pred Y DT to the X zone together.

4) Right-click in the graph and select Customize.

5) Click the plus sign icon.

6) Enter Y Function(x, x); in the script editor box. This JSL statement draws the reference line y = x.

7) Click OK.

9) Click Done.

10) If you are going to publish this graph, change the graph title to Actual by Predicted, change the X axis title to Predicted, and change the markers for each X variable.

The fits are fairly similar. The decision tree model gives chunky predictions because it is using discrete leaves to predict a continuous response.
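The visual comparison can be backed up with the same summary statistics that the reports show. The Python sketch below computes R², RASE (root average squared error), and mean absolute deviation from a column of actual values and a column of predictions. The function name and the example numbers are hypothetical; in practice the inputs would be Y and one of the saved prediction columns.

```python
import math


def fit_metrics(actual, predicted):
    """R-square, RASE, and mean absolute deviation for a set of predictions."""
    n = len(actual)
    residuals = [a - p for a, p in zip(actual, predicted)]
    sse = sum(r * r for r in residuals)
    mean = sum(actual) / n
    sst = sum((a - mean) ** 2 for a in actual)
    return {
        "rsquare": 1 - sse / sst,
        "rase": math.sqrt(sse / n),
        "mean_abs_dev": sum(abs(r) for r in residuals) / n,
    }


if __name__ == "__main__":
    # Hypothetical actual values and predictions from two models.
    y = [10.0, 12.0, 15.0, 11.0, 14.0]
    smooth_model = [10.4, 11.7, 14.6, 11.5, 13.8]
    chunky_model = [11.0, 11.0, 14.5, 11.0, 14.5]  # only two distinct values
    print(fit_metrics(y, smooth_model))
    print(fit_metrics(y, chunky_model))
```

Computing the statistics on the same rows for all three prediction columns keeps the comparison fair, because the validation rows differ between platforms.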

Categorical response, continuous and categorical predictors

- Use the data in PM 2.jmp to build predictive models.

a. Visualize the data using Graph Builder. Are there any data problems?

1) Open PM 2.jmp.

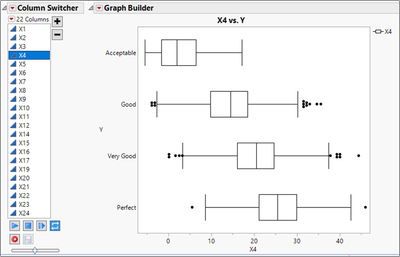

2) Select Graph > Graph Builder.

3) Drag Y to the Y zone.

4) Drag X1 to the X zone.

5) Select the Boxplot element on the Elements bar.

6) Click Done.

7) Click the red triangle next to Graph Builder and select Redo > Column Switcher.

9) Click OK.

Several strong relationships between Y and the predictors can be seen.

Build visualizations for the categorical predictors.

10) Select Graph > Graph Builder.

11) Drag Y to the Y zone.

12) Drag X13 to the X zone.

13) Drag X18 to the X axis to the right of X13.

14) Select the Heatmap element from the Elements bar.

15) Click Done.

There appears to be an association between Y and both categorical predictors.

No data problems are evident in either graph.

b. Build an ordinal regression model using Fit Model and the Response Surface macro. What is the misclassification rate of the full model? Hint: open the Fit Details report. What is the misclassification rate for the Acceptable group? Hint: open the Confusion Matrix report.

1) Select Analyze > Fit Model.

2) Select Y, then click Y.

3) Select X1 through X24, then click Macros > Response Surface.

4) Click Run.

5) Close the Effect Summary outline.

6) Open the Fit Details outline.

The misclassification rate is about 16%.

7) Click the red triangle next to Ordinal Logistic Fit for Y and select Confusion Matrix.

The misclassification rate for the Acceptable group is 1 – 0.75 = 0.25. Five Acceptable observations were classified as Good.
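The arithmetic behind the overall and per-group misclassification rates can be sketched in Python as follows. The Acceptable row uses counts consistent with the text above (15 correct and 5 classified as Good, assuming those five are the only misclassified Acceptable rows); the Good row is hypothetical and is included only to make the example complete.

```python
def misclassification_rates(confusion):
    """Overall and per-class misclassification rates.

    `confusion` maps each actual level to a dict of predicted level -> count.
    """
    total = sum(sum(row.values()) for row in confusion.values())
    wrong = sum(
        count
        for actual, row in confusion.items()
        for predicted, count in row.items()
        if predicted != actual
    )
    per_class = {
        actual: 1 - row.get(actual, 0) / sum(row.values())
        for actual, row in confusion.items()
    }
    return wrong / total, per_class


if __name__ == "__main__":
    confusion = {
        # Consistent with 1 - 0.75 = 0.25 and five rows classified as Good.
        "Acceptable": {"Acceptable": 15, "Good": 5},
        # Hypothetical counts, not taken from the report.
        "Good": {"Acceptable": 3, "Good": 57},
    }
    overall, per_class = misclassification_rates(confusion)
    print(overall)
    print(per_class)
```

Reporting the per-group rate alongside the overall rate matters when one group is much smaller than the others, because the overall rate is dominated by the larger groups.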

c. Build a neural network model using the default settings of the Neural platform. What is the misclassification rate of the model on the validation set? In particular, what is the misclassification rate of the Acceptable group? Fit another model with 50 nodes. What is the misclassification rate of the model on the validation set? What is the misclassification rate of the Acceptable group?

1) Select Analyze > Predictive Modeling > Neural.

2) Select Y, then click Y, Response.

3) Select X1 through X24, then click X, Factor.

4) If you want your results to match this solution (Windows), enter 11793 as the random seed.

5) Click OK.

6) Click Go.

The misclassification rate on the validation set is about 23%. The misclassification rate of the Acceptable group is 100%. All seven observations in the Acceptable group were misclassified as Good. All thirteen observations in the Acceptable group in the training data were also misclassified as Good.

7) Open the Model Launch outline.

8) Enter 50 in the Hidden Nodes box.

9) Click Go.

The misclassification rate on the validation set is again about 23%. The misclassification rate of the Acceptable group is about 29%.

d. Build a decision tree model using the Partition platform with a 25% validation set. What is the misclassification rate of the model on the validation set? What is the misclassification rate of the Acceptable group?

1) Select Analyze > Predictive Modeling > Partition.

2) Select Y, then click Y, Response.

3) Select X1 through X24, then click X, Factor.

4) For the Validation Portion, enter 0.25.

5) Click OK.

6) Click Go.

7) If needed, to remove the tree from the output, click the red triangle next to Partition for Y and select Display Options > Show Tree.

Your results will vary due to the random nature of the holdout procedure. The misclassification rate on the validation set is about 27%. The misclassification rate of the Acceptable group on the validation set is about 57%. About half the observations in the Acceptable group were misclassified as Good.
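The reason results vary from run to run can be illustrated with a small Python sketch of a random holdout split. It is an assumption-laden illustration: the function is hypothetical, it does not reproduce JMP's sampling, and seeds used here have no relation to the random seeds entered in JMP.

```python
import random


def holdout_split(n_rows, validation_portion, seed=None):
    """Randomly assign row indices to training and validation sets."""
    rng = random.Random(seed)
    rows = list(range(n_rows))
    rng.shuffle(rows)
    n_validation = round(n_rows * validation_portion)
    validation = sorted(rows[:n_validation])
    training = sorted(rows[n_validation:])
    return training, validation


if __name__ == "__main__":
    # Two unseeded runs generally hold out different rows.
    _, first = holdout_split(100, 0.25)
    _, second = holdout_split(100, 0.25)
    print(first[:5], second[:5])
    # A fixed seed makes the split repeatable within this sketch.
    _, a = holdout_split(100, 0.25, seed=1)
    _, b = holdout_split(100, 0.25, seed=1)
    print(a == b)
```

Because a small group such as Acceptable contributes only a handful of rows to the validation set, its misclassification rate can change noticeably from one random split to the next.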


- © 2024 JMP Statistical Discovery LLC. All Rights Reserved.
