
Turn many small decisions into one big process optimization success


How do you apply design of experiments (DOE) to a process? Join systems engineer Tom Donnelly on March 18, when he presents a concise 30-minute demo and discussion on Optimizing Processes Using Designed Experiments as part of the Mastering JMP webinar series.

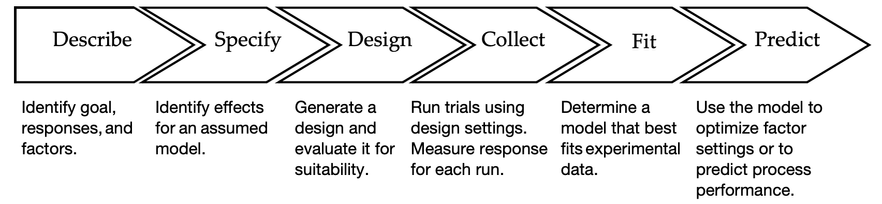

Tom will cover a six-step framework for creating a successful designed experiment that can be run in your work environment. The steps?

- Describe the goal, factors and responses.

- Specify an assumed model that you believe adequately describes the physical situation.

- Generate and evaluate a design that is consistent with your assumed model.

- Incorporate trial run measurements into the design.

- Determine the best model (often a subset of the proposed model).

- Use model results to optimize factor settings and/or predict performance.
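The six steps can be traced end to end in a few lines of Python. This is a minimal illustration under stated assumptions, not how JMP builds or analyzes designs. It uses three hypothetical continuous factors in coded units (-1 = low, +1 = high) and a two-level full factorial as the generated design. A made-up `simulated_process` function stands in for real trial measurements, and a hypothetical `THRESHOLD` decides which terms to keep. Because a full factorial is orthogonal in coded units, each least-squares coefficient reduces to the average of x times y, so no matrix algebra is needed.

```python
from itertools import product

# Step 1 (Describe): hypothetical goal is to maximize one response using
# three continuous factors, all in coded units (-1 = low, +1 = high).
FACTORS = ["temp", "time", "pressure"]  # hypothetical factor names

# Step 2 (Specify): assume a first-order (main effects) model:
#   y = b0 + b1*temp + b2*time + b3*pressure

# Step 3 (Generate): a 2^3 full factorial supports that model and is orthogonal.
design = [list(run) for run in product([-1, 1], repeat=len(FACTORS))]

# Step 4 (Incorporate): stand-in for real trial measurements.
# This "process" is hypothetical and noise-free, purely for illustration.
def simulated_process(x):
    temp, time, pressure = x
    return 50 + 8 * temp + 3 * time + 0.1 * pressure

responses = [simulated_process(run) for run in design]

# Step 5 (Determine): in an orthogonal +/-1 design, each least-squares
# coefficient equals the mean of x_j * y over all runs.
n = len(design)
intercept = sum(responses) / n
coefs = {
    name: sum(run[j] * y for run, y in zip(design, responses)) / n
    for j, name in enumerate(FACTORS)
}
# Keep only terms above a hypothetical practical-importance threshold.
THRESHOLD = 1.0
reduced = {name: b for name, b in coefs.items() if abs(b) >= THRESHOLD}

# Step 6 (Use): for a main-effects model, the maximum is at the corner where
# each retained factor takes the sign of its coefficient.
best = {name: (1 if b > 0 else -1) for name, b in reduced.items()}
predicted = intercept + sum(abs(b) for b in reduced.values())

print("coefficients:", coefs)
print("reduced model:", reduced)
print("best coded settings:", best)
print("predicted response:", predicted)
```

In a real study, step 5 would rely on significance tests or model selection rather than a fixed threshold, and the measured responses would include noise.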

As part of the JMP team supporting US defense and aerospace customers, Tom attends and/or presents at the Military Operations Research Symposium (MORS) each year, where he demonstrates how to use JMP to attack analysis problems. He and some of his JMP colleagues are also presenting at an in-person conference, DATAWorks 2022 (Defense and Aerospace Test and Analysis Workshop), on April 26-28, 2022. Those sessions are:

- Mixed Models (Tutorial) by Elizabeth Claassen, JMP Research Statistician Developer

- Self-Validating Ensemble Modeling by Chris Gotwalt, JMP Chief Data Scientist

- Functional Data as Both Input to & Output from a Glycemic Response Model by Tom Donnelly, JMP Principal Systems Engineer

I recently spoke with Tom about the types of challenges his customers encounter and how he helps guide them through the DOE process. Plus, he revealed a bit about his National Park explorations.

In his upcoming Mastering JMP webinar, Tom uses a case study approach that incorporates some of the questions and answers that are critical to the success of a designed experiment. He listed for me some of the questions for the first three steps in the framework. I invite you to think about how they apply to your work environment and to register for his March 18 Mastering JMP webinar.

Describe

- What is the goal of the experimentation?

- How do you measure success?

- What response variables do you measure?

- If more than one response needs to be characterized for your process, what are the relative levels of importance?

- What kind of control factors do you have?

  - Continuous (quantitative): varies over a range.

  - Categorical (qualitative): varies as different levels.

  - Discrete numeric: analyzed like a continuous factor, but only available at discrete levels, like an ordinal categorical factor.

  - Mixture or formulation factor: behaves like a continuous factor, but all mixture component proportions are constrained to sum to a fixed total (typically 1.00).

  - Blocking factors: groupings of trials, such as day or batch, that should not affect the response. We add blocking factors to see whether the process shifts between groups, which would indicate that unknown or lurking factors are correlated with the blocks.

- Over what ranges does it make sense to operate the control factors?

  - Too bold may break the process.

  - Too timid may not generate a sufficiently large effect.

  - Don’t know? Involve subject matter experts on the process.

- Are there potentially important factors that can’t be controlled?

  - Can any uncontrollable factors be monitored so that the settings can be captured and recorded (e.g., ambient temperature, humidity, operator at time of trial)? These can be treated as covariate factors.

- Does the process drift over the course of the day or period being measured?

- How many trials can be run in a day? Will multiple days be required?

- Do you typically run control samples for this process?

- Will trials be run in batches or groups?

- Are there any hard-to-change factors, and if so, which ones?

- How many devices do you have of each type?

- Do you have historical data that can be “data mined” for possible factors and to better understand factor ranges?

- Are these real experiments or are they computer simulations?

  - If simulations, are they deterministic (same answer every time) or stochastic (randomness built in, so the answer is slightly different each time)?
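The factor types and operating ranges in the Describe questions determine what counts as a valid setting. In this minimal sketch (the helper names are hypothetical, not JMP functions), a continuous factor's chosen range is mapped to coded units, with the low end at -1 and the high end at +1. A mixture run is checked against the constraint that its proportions sum to a fixed total, assumed here to be 1.00.

```python
import math

def to_coded(value, low, high):
    """Map a natural-unit setting in [low, high] to coded units in [-1, +1]."""
    center = (high + low) / 2
    half_range = (high - low) / 2
    return (value - center) / half_range

def from_coded(coded, low, high):
    """Map a coded setting back to natural units."""
    return (high + low) / 2 + coded * (high - low) / 2

def is_valid_mixture(proportions, total=1.0, tol=1e-9):
    """A mixture run is valid only if every proportion is nonnegative and
    the proportions sum to the required total (typically 1.00)."""
    return all(p >= 0 for p in proportions) and math.isclose(
        sum(proportions), total, abs_tol=tol
    )

# Hypothetical temperature range of 150 to 200 degrees
print(to_coded(150, 150, 200))            # -1.0
print(to_coded(175, 150, 200))            # 0.0
print(from_coded(1, 150, 200))            # 200.0
print(is_valid_mixture([0.5, 0.3, 0.2]))  # True
print(is_valid_mixture([0.5, 0.3, 0.3]))  # False
```

Coding puts every continuous factor on the same -1 to +1 scale, so the sizes of model coefficients for factors measured in different units can be compared directly.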

Specify

- Are you looking to identify important main effects from a large set (e.g., 6+) of factors?

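- Are you looking to build a predictive model with which to characterize and optimize a process? NOTE: These two questions determine whether the proposed model will be first order (main effects only, typical for screening many factors) or second order (adding two-factor interactions and quadratic terms, typical for characterization and optimization).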
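The model order chosen here drives the size of the experiment. As a rough illustration (`model_terms` is a hypothetical helper, not a JMP function), the sketch below lists the terms in a first-order model and in a full second-order model for k continuous factors. The first-order model has k + 1 coefficients, while the second-order model has (k + 1)(k + 2)/2. A design needs at least as many distinct runs as there are coefficients, which is one reason screening studies with many factors usually start with a first-order model.

```python
from itertools import combinations

def model_terms(factors, order):
    """Return the terms of a first- or second-order polynomial model."""
    if order not in (1, 2):
        raise ValueError("order must be 1 or 2")
    # First order: intercept plus one main effect per factor.
    terms = ["Intercept"] + list(factors)
    if order == 2:
        # Second order adds all two-factor interactions...
        terms += [f"{a}*{b}" for a, b in combinations(factors, 2)]
        # ...and a pure quadratic term for each factor.
        terms += [f"{f}*{f}" for f in factors]
    return terms

for k in (3, 6):
    names = [f"X{i}" for i in range(1, k + 1)]
    first = model_terms(names, 1)
    second = model_terms(names, 2)
    print(f"k={k}: first-order {len(first)} terms, second-order {len(second)} terms")
# k=3: first-order 4 terms, second-order 10 terms
# k=6: first-order 7 terms, second-order 28 terms
```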

Design

- What is your budget?

- What is your deadline?

- Does every trial cost about the same (i.e., take the same amount of time to set up and run)?

- Does getting set up to run the first trial cost substantially more than running the next few? Whenever the initial setup cost is large, consider adding extra trials (replicates or, especially, checkpoints) while they are cheap to run.

- Do any combinations of variable settings cause problems (e.g., unsafe, too costly, breaks the equipment/process, impossible to achieve)?

- Will you need to constrain the design space or disallow certain combinations of factor settings?

- If you run the same process on separate days, do you ever get obviously/surprisingly different results?

- Do you have past records of replicated trials for each response?

- Are the replicate trial response values close together or spread out over time?

- How big is the variability for each response? That is, what is the standard deviation or root mean squared error (RMSE) of the response?

- How small a difference in each response is considered practically important?

- For each response, do you think you are looking for tiny differences in big variability (hard to do because lots of replication is needed) or big differences in small variability (easy to do)?

- What is the desired level of confidence in declaring that an effect is real? This is typically 95%, which corresponds to setting alpha, the Type I error rate (the probability of declaring an effect that is not really there), at 0.05.

- What are acceptable levels of power for the various types of effects (main, interaction, quadratic, categorical levels)? NOTE: Power is the probability of NOT missing an effect if it is real, that is, 1 minus the Type II error rate. It is typically set at 0.8 for main effects and interactions, and lower for quadratic effects.

- How hard is it to come back later to run checkpoint trials? Can you build in checkpoint trials now, especially if they are inexpensive to run? If so, where? Candidates include:

  - Your guess at where the best performance will occur.

  - Your guess at where the poorest performance will occur.

  - Your boss’s opinion as to where to run the process.

  - A trial to support fitting the next higher-order model.

  - Some points outside the design region.

- What trial do you think is most likely to break the design? NOTE: Perhaps run that trial first.
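The RMSE, the smallest practically important difference, alpha, and the target power fit together in a power calculation. This minimal sketch makes several assumptions: a balanced two-level design in coded ±1 units, a known RMSE, and a normal approximation in place of the t distribution that JMP and most DOE software use. The function names are hypothetical. A difference of `delta` between a factor's low and high settings corresponds to a coefficient of delta/2, whose standard error in such a design is RMSE/sqrt(n).

```python
from math import sqrt
from statistics import NormalDist

def main_effect_power(delta, rmse, n_runs, alpha=0.05):
    """Approximate power to detect a change of `delta` in the response between
    a factor's low and high settings in a balanced two-level design.

    Normal approximation with known RMSE; exact t-based calculations give
    somewhat lower power, especially when few error degrees of freedom remain.
    """
    z = NormalDist()                          # standard normal
    z_crit = z.inv_cdf(1 - alpha / 2)         # two-sided critical value
    se_coef = rmse / sqrt(n_runs)             # SE of a +/-1 coded coefficient
    noncentrality = (delta / 2) / se_coef     # coefficient = delta / 2
    # Probability the test statistic lands in either rejection region.
    return z.cdf(noncentrality - z_crit) + z.cdf(-noncentrality - z_crit)

def runs_for_power(delta, rmse, target=0.8, alpha=0.05, max_runs=10_000):
    """Smallest run count reaching the target power (same approximation)."""
    for n in range(2, max_runs + 1):
        if main_effect_power(delta, rmse, n, alpha) >= target:
            return n
    return None

# Hypothetical numbers: a big difference in small variability versus
# a tiny difference in big variability.
print(round(main_effect_power(delta=2.0, rmse=1.0, n_runs=16), 3))
print(round(main_effect_power(delta=0.5, rmse=2.0, n_runs=16), 3))
print(runs_for_power(delta=2.0, rmse=1.0))
print(runs_for_power(delta=0.5, rmse=2.0))
```

With these hypothetical numbers, a 2-unit difference against an RMSE of 1 reaches 80% power with roughly 8 runs under this approximation, while a 0.5-unit difference against an RMSE of 2 needs several hundred. An exact t-based calculation, which also accounts for the error degrees of freedom used by the other model terms, would call for more runs in both cases.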


- © 2024 JMP Statistical Discovery LLC. All Rights Reserved.
